A research team has developed an artificial intelligence system capable of generating short stories, newspaper articles or poetry from a few words supplied by any user; many experts who have analyzed it say the tool writes in a “human-like” way.

This text generator, developed by the firm OpenAI, was released some time ago, although at the time it was considered too powerful for widespread use because of its potential for malicious purposes. Now, with the new updates, the tool could be used to populate hundreds of fake news sites and to flood social media with spam.

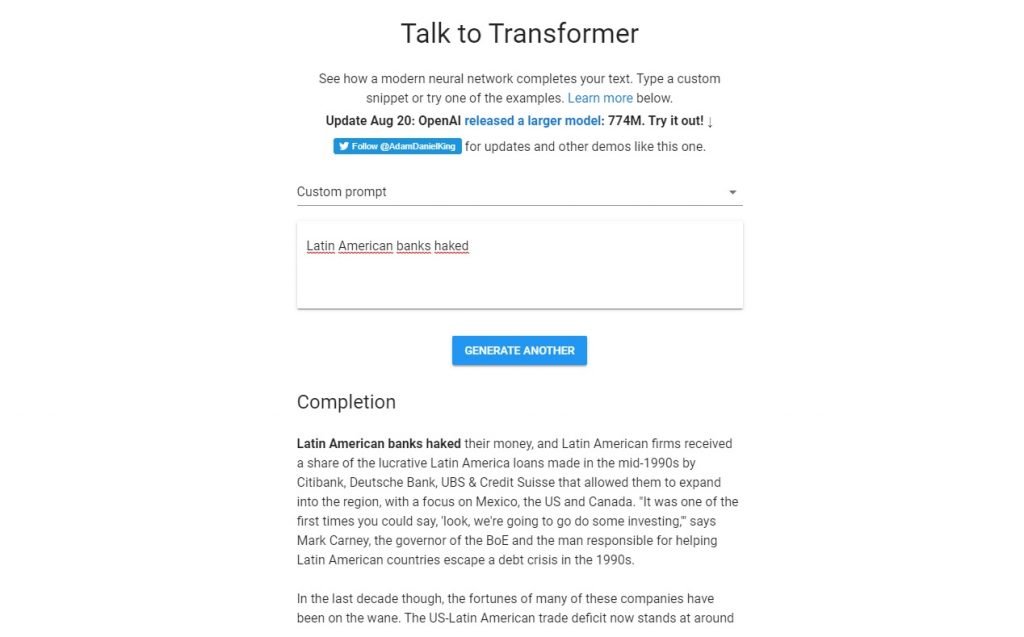

Information security experts from several cybersecurity firms and institutions decided to try this tool. The new version, known as GPT-2, is a model trained on a data set of more than eight million web pages and is able to adapt to any style of text; the user only needs to enter a few words into the interface to get a full text. “From a few words, this tool is capable of completing poems, journalistic texts or editorial notes,” the experts said.
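The idea of extending a short prompt into longer text can be illustrated with a toy sketch. This is not GPT-2 and not OpenAI's code: it swaps in a tiny bigram (word-pair) model, and the function names `train_bigrams` and `complete`, as well as the three-sentence corpus, are hypothetical and chosen only for illustration. The model counts which word tends to follow which, then continues a prompt one word at a time.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict:
    """Count, for every word, which words follow it in the corpus."""
    followers = defaultdict(Counter)
    words = corpus.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def complete(prompt: str, followers: dict, max_new_words: int = 8) -> str:
    """Extend the prompt one word at a time with the most frequent follower."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        options = followers.get(words[-1])
        if not options:
            break  # the last word was never seen with a follower
        # Greedy choice: always take the most frequent next word.
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical corpus standing in for millions of web pages.
TOY_CORPUS = (
    "the tool writes news articles . "
    "the tool writes poems . "
    "the tool writes news stories ."
)

model = train_bigrams(TOY_CORPUS)
print(complete("the tool", model, max_new_words=2))  # prints: the tool writes news
```

A real system such as GPT-2 replaces the word-pair counts with a large neural network and usually samples from the predicted words rather than always taking the most frequent one, but the loop of "predict the next word, append it, repeat" is the same basic pattern.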

After releasing the first version, and given the potential for malicious use of the tool, OpenAI stated: “We are concerned about the possible malicious application of this technology, so we will not launch the trained model. The currently available version is a much smaller model, released for research purposes”.

A couple of weeks ago, the firm decided to extend the test version by enlarging the data set used to train the software. After analyzing this extended version, information security experts concluded that the tool works well enough to generate coherent, well-written text.

Although it is an incredibly powerful development, many members of the cybersecurity community are pessimistic about how this tool will be used. “I am terrified to think that GPT-2 is that kind of technology that a malicious person uses to lie, manipulate or misinform the population; this possibility makes technology the most powerful weapon,” says Tristan Greene, a cybersecurity specialist.

Information security specialists at the International Institute of Cyber Security (IICS) likewise believe that, should this project work as its developers expect, it would be ridiculously easy to create fake content, spam and clickbait. This is an undesirable scenario given the reach such content can achieve through web pages and social media, and the risk of exposing users to phishing or malware-infested websites.

“Fortunately, for now this tool generates readable but untrustworthy content compared to real news or writing platforms; an auto-generated text is perfectly identifiable compared to text written by a human being, especially when working with words with more than one possible application,” Greene adds.

OpenAI was founded in 2015 as a non-profit organization intended to drive the development of artificial intelligence for the benefit of the world. The well-known entrepreneur Elon Musk was among its founders, although he has long since stopped collaborating with the firm.

Below is an example of a text automatically generated by the tool using only four words:

He is a well-known expert in mobile security and malware analysis. He studied Computer Science at NYU and started working as a cyber security analyst in 2003. He is actively working as an anti-malware expert. He also worked for security companies like Kaspersky Lab. His everyday job includes researching about new malware and cyber security incidents. Also he has deep level of knowledge in mobile security and mobile vulnerabilities.