Introduction

To pentest any website, we first have to find all of the target's URLs that carry parameters, using either an automated or a manual technique. Once we have collected the parameters, we are ready to start fuzzing them. According to researchers at the International Institute of Cyber Security, fuzzing is an automated software testing technique in which random data is inserted into parameters to find loopholes or to discover errors in the website.
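
To make "inserting random data into parameters" concrete, here is a minimal Python sketch of our own. It is not part of ParamSpider. The target URL and the helper names `random_payload` and `fuzz_url` are hypothetical. For each parameter in turn, the sketch swaps the value for a random string and leaves the other parameters untouched. It only builds the URLs and does not send any requests.

```python
import random
import string
from typing import List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Letters, digits and punctuation give a mix of normal and unusual characters.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def random_payload(rng: random.Random, max_len: int = 16) -> str:
    """Return a random string of 1 to max_len characters."""
    length = rng.randint(1, max_len)
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def fuzz_url(url: str, rng: random.Random) -> List[str]:
    """Return one URL per parameter, with random data in that parameter only."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    mutated = []
    for index, (name, _) in enumerate(params):
        fuzzed = list(params)
        fuzzed[index] = (name, random_payload(rng))
        # urlencode percent-encodes the random data so the URL stays well formed.
        mutated.append(urlunsplit(parts._replace(query=urlencode(fuzzed))))
    return mutated


if __name__ == "__main__":
    rng = random.Random(1337)  # fixed seed so a run can be repeated exactly
    for candidate in fuzz_url("https://target.com/search.php?q=shoes&page=2", rng):
        print(candidate)
```

Changing one parameter per URL means any error in the response can be traced back to a single parameter.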

ParamSpider crawls the parameters of a target domain by mining URLs from the Internet Archive (the Wayback Machine). By specifying file extensions, we can also filter files such as .jpg, .png, .svg, and text files. We mainly set the options that control how the target domain is scraped, and the tool marks each discovered parameter with the FUZZ keyword, ready for fuzzing. The tool is simple and easy to use, and it can also help us find vulnerable URLs. ParamSpider is an open-source web hacking tool.
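
The sketch below outlines, under our own assumptions, how archive-based parameter discovery can work. It is not ParamSpider's source code. It builds a query for the Wayback Machine CDX API; the endpoint and the `url`, `matchType`, `fl` and `collapse` fields come from that API. It then keeps only URLs that carry a query string, drops URLs whose path ends in an excluded extension, and replaces every parameter value with FUZZ. The helper names and the default extension list are hypothetical. `fetch_archived_urls` needs network access, so the example runs on a small offline sample instead.

```python
from typing import Iterable, List, Optional, Sequence
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"
# Illustrative list of static-file extensions to skip; not ParamSpider's own default.
DEFAULT_EXCLUDE = ("png", "jpg", "jpeg", "gif", "svg", "css", "woff")


def build_cdx_query(domain: str, include_subs: bool = True) -> str:
    """Build a CDX API URL that lists archived URLs for the domain, one per line."""
    params = {
        "url": domain,
        "matchType": "domain" if include_subs else "host",  # "domain" also covers subdomains
        "fl": "original",  # return only the original URL field
        "collapse": "urlkey",  # skip repeated captures of the same URL
    }
    return CDX_ENDPOINT + "?" + urlencode(params)


def fetch_archived_urls(domain: str, include_subs: bool = True) -> List[str]:
    """Download the archived URL list (requires network access)."""
    with urlopen(build_cdx_query(domain, include_subs), timeout=60) as resp:
        return resp.read().decode("utf-8", "replace").splitlines()


def to_fuzz_template(url: str, placeholder: str = "FUZZ") -> Optional[str]:
    """Replace every parameter value with the placeholder; None if there are no parameters."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    if not params:
        return None
    query = urlencode([(name, placeholder) for name, _ in params])
    return urlunsplit(parts._replace(query=query))


def extract_fuzzable(
    urls: Iterable[str],
    exclude: Sequence[str] = DEFAULT_EXCLUDE,
    placeholder: str = "FUZZ",
) -> List[str]:
    """Turn archived URLs into unique FUZZ templates, skipping excluded extensions."""
    seen = set()
    templates = []
    for raw in urls:
        url = raw.strip()
        path = urlsplit(url).path.lower()
        if any(path.endswith("." + ext.lower()) for ext in exclude):
            continue
        template = to_fuzz_template(url, placeholder)
        if template is not None and template not in seen:
            seen.add(template)
            templates.append(template)
    return templates


if __name__ == "__main__":
    # Offline sample; fetch_archived_urls("target.com") would supply real data.
    archived = [
        "http://target.com/index.php?id=1",
        "http://target.com/index.php?id=2",
        "http://target.com/logo.png?v=3",
        "http://target.com/about",
        "http://target.com/search?q=a&lang=en",
    ]
    for template in extract_fuzzable(archived):
        print(template)
```

Collapsing values into FUZZ before deduplicating is what turns many archived captures of the same page into a single entry to fuzz.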

Environment

- OS: Kali Linux 2020, 64-bit

- Kernel version: 5.6.0

Installation Steps

- Use this command to clone the project.

- git clone https://github.com/devanshbatham/ParamSpider

root@kali:/home/iicybersecurity# git clone https://github.com/devanshbatham/ParamSpider
Cloning into 'ParamSpider'...
remote: Enumerating objects: 219, done.
remote: Counting objects: 100% (219/219), done.
remote: Compressing objects: 100% (151/151), done.
remote: Total 219 (delta 117), reused 138 (delta 61), pack-reused 0
Receiving objects: 100% (219/219), 181.94 KiB | 185.00 KiB/s, done.
Resolving deltas: 100% (117/117), done.

- Use the cd command to enter the ParamSpider directory.

root@kali:/home/iicybersecurity# cd ParamSpider/
root@kali:/home/iicybersecurity/ParamSpider#

- Next, install the requirements with this command: pip3 install -r requirements.txt

root@kali:/home/iicybersecurity/ParamSpider# pip3 install -r requirements.txt
Collecting certifi==2020.4.5.1
Downloading certifi-2020.4.5.1-py2.py3-none-any.whl (157 kB)
|████████████████████████████████| 157 kB 68 kB/s
Requirement already satisfied: chardet==3.0.4 in /usr/lib/python3/dist-packages (from -r requirements.txt (line 2)) (3.0.4)
Collecting idna==2.9
Downloading idna-2.9-py2.py3-none-any.whl (58 kB)
|████████████████████████████████| 58 kB 33 kB/s
Collecting requests==2.23.0
Downloading requests-2.23.0-py2.py3-none-any.whl (58 kB)
|████████████████████████████████| 58 kB 78 kB/s
Collecting urllib3==1.25.8
=== SNIP ===
Attempting uninstall: requests
Found existing installation: requests 2.21.0
Uninstalling requests-2.21.0:
Successfully uninstalled requests-2.21.0
Successfully installed certifi-2020.4.5.1 idna-2.9 requests-2.23.0 urllib3-1.25.8
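
After installing the requirements, we can confirm that the exact versions pinned in requirements.txt are present. This is a minimal sketch that assumes Python 3.8 or later, because it relies on the standard-library `importlib.metadata` module. It only handles exact `name==version` pins. `parse_pins` and `check_pins` are hypothetical helper names, not part of ParamSpider.

```python
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path
from typing import Dict, Iterable, List


def parse_pins(lines: Iterable[str]) -> Dict[str, str]:
    """Collect exact 'name==version' pins; other specifiers and comments are ignored."""
    pins = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop inline comments
        if "==" in line:
            name, pinned = line.split("==", 1)
            pins[name.strip()] = pinned.strip()
    return pins


def check_pins(pins: Dict[str, str]) -> List[str]:
    """Describe every pin whose installed version is missing or different."""
    problems = []
    for name, pinned in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed (wanted {pinned})")
            continue
        if installed != pinned:
            problems.append(f"{name}: installed {installed}, wanted {pinned}")
    return problems


if __name__ == "__main__":
    requirements = Path("requirements.txt")  # run from inside the ParamSpider directory
    if requirements.exists():
        lines = requirements.read_text(encoding="utf-8").splitlines()
        issues = check_pins(parse_pins(lines))
        print("\n".join(issues) if issues else "All pinned requirements are installed.")
    else:
        print("requirements.txt not found in the current directory.")
```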

- To view the help options, run this command: python3 paramspider.py -h

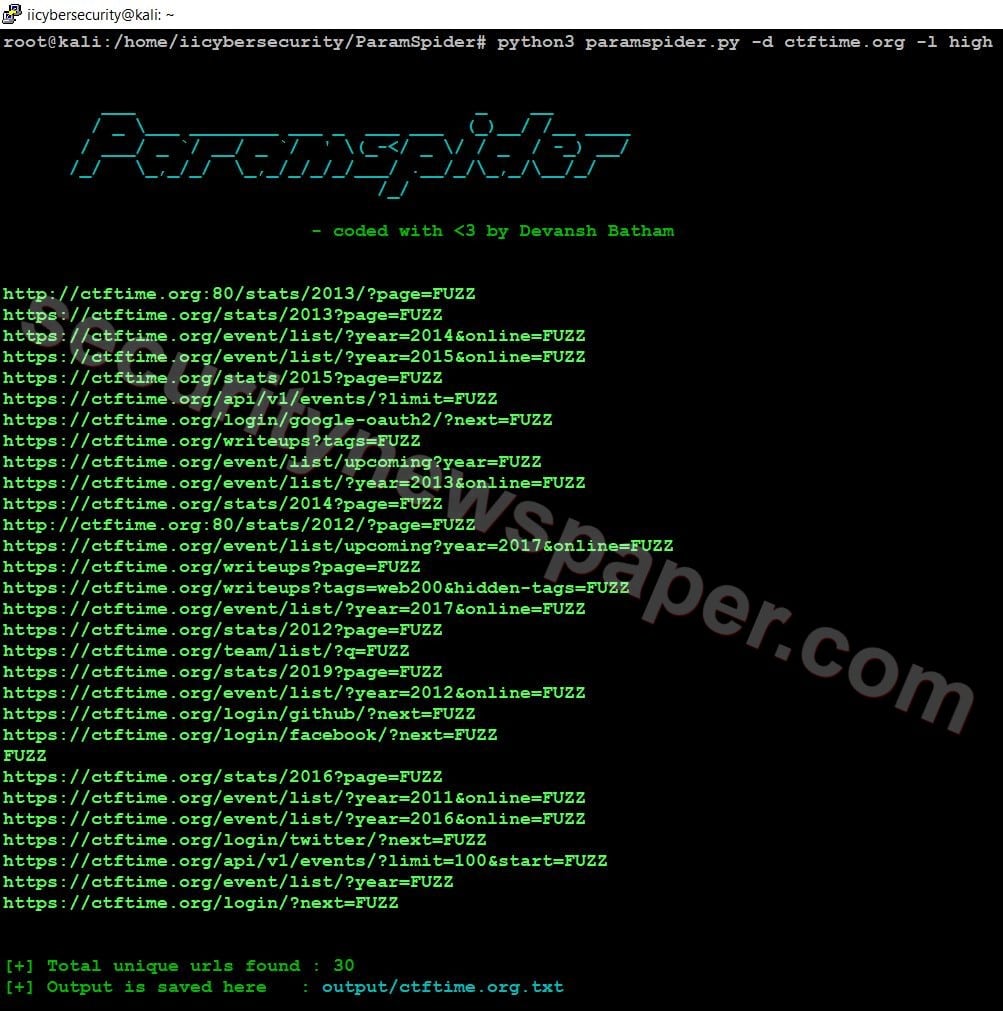

- Now, launch the tool with this command: python3 paramspider.py --domain <target.com> -l <level>

- We can also set the output file name with the -o <file name> option.

- We successfully got the target URLs with different parameters. As the output shows, every parameter comes with the FUZZ keyword, which can be replaced with our own fuzzing characters.

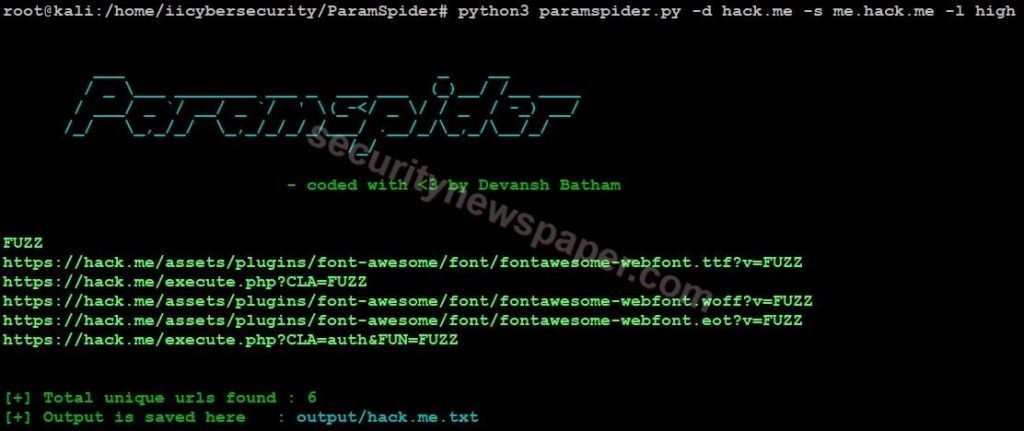
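
Once the FUZZ templates are saved, for example with -o, each placeholder can be swapped for test payloads. The Python sketch below is a hypothetical helper and not a ParamSpider feature. It reads one URL per line from a result file, percent-encodes each payload, and substitutes it for every FUZZ in the URL. The payload list is only illustrative. The sketch builds URLs and sends nothing.

```python
from pathlib import Path
from typing import Iterable, List
from urllib.parse import quote


def load_templates(path: str) -> List[str]:
    """Read one URL template per line, skipping blank lines."""
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def expand_templates(
    templates: Iterable[str], payloads: Iterable[str], placeholder: str = "FUZZ"
) -> List[str]:
    """Substitute each percent-encoded payload for every placeholder in each template."""
    payload_list = list(payloads)  # allow a one-shot iterator to be reused per template
    urls = []
    for template in templates:
        for payload in payload_list:
            urls.append(template.replace(placeholder, quote(payload, safe="")))
    return urls


if __name__ == "__main__":
    # Illustrative payloads only: a quote character, a boolean test and an HTML tag.
    sample_payloads = ["'", "1 OR 1=1", "<b>test</b>"]
    for url in expand_templates(["http://target.com/index.php?id=FUZZ"], sample_payloads):
        print(url)
```

Putting the same payload into every FUZZ at once keeps the sketch short. Fuzzing one parameter at a time makes it easier to tell which parameter caused an error.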

- We can also use the subdomain option to scrape the target data with this command: python3 paramspider.py --domain <target.com> -s <sub domain>

- Here, we got six unique results for the target.

- We can also specify a particular parameter to scrape from the target domain with this command: python3 paramspider.py --domain <target.com> -p <specific parameter>
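
A saved list of results can also be narrowed down after the scan. The sketch below is our own post-processing example. It does not describe how ParamSpider implements its options. It keeps URLs whose host is the target domain, and optionally its subdomains. It can also keep only the URLs that carry a given parameter name. All function names are hypothetical.

```python
from typing import Iterable, List, Optional
from urllib.parse import parse_qsl, urlsplit


def in_scope(url: str, domain: str, include_subs: bool = True) -> bool:
    """True if the URL's host is the domain itself or, optionally, one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    domain = domain.lower()
    # Compare on a dot boundary so that "eviltarget.com" does not match "target.com".
    return host == domain or (include_subs and host.endswith("." + domain))


def has_parameter(url: str, name: str) -> bool:
    """True if the URL's query string contains a parameter with exactly this name."""
    query = urlsplit(url).query
    return any(key == name for key, _ in parse_qsl(query, keep_blank_values=True))


def filter_results(
    urls: Iterable[str],
    domain: str,
    include_subs: bool = True,
    parameter: Optional[str] = None,
) -> List[str]:
    """Keep in-scope URLs and, if a parameter name is given, only those that carry it."""
    return [
        url
        for url in urls
        if in_scope(url, domain, include_subs)
        and (parameter is None or has_parameter(url, parameter))
    ]


if __name__ == "__main__":
    results = [
        "http://target.com/a.php?id=FUZZ",
        "http://dev.target.com/b.php?q=FUZZ&id=FUZZ",
        "http://eviltarget.com/c.php?id=FUZZ",
    ]
    print(filter_results(results, "target.com", parameter="q"))
```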

Conclusion

As we saw, ParamSpider scrapes the parameters of a target domain. Using it, we can find the different parameters to FUZZ, which helps us discover loopholes and vulnerabilities in the website.

Cyber Security Specialist with 18+ years of industry experience. Worked on projects with AT&T, Citrix, Google, Conexant, IPolicy Networks (Tech Mahindra) and HFCL. Constantly keeping the world updated on happenings in the cyber security area.