In recent years, speaking to voice interfaces has become a normal part of our lives. We interact with voice-enabled assistants in our cars, smartphones and smart devices, and during telephone banking. More and more banks around the globe are adopting voice biometrics, a technology that matches personal voice patterns to verify a customer’s identity in seconds using just their voice. To identify a customer, the system captures the caller’s voice and compares its characteristics with those of a previously created voice pattern. If the two match, the software confirms that the person speaking is the customer registered against that pattern. Once customers have created their voice authentication pattern, they dial the bank, enter their account number, customer ID or card number, and repeat a phrase such as “My voice is my password” or “My voice is my signature.” They can then access their telephone banking account, where they can make transactions more securely.
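The matching step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular bank's system works: it assumes the voice pattern and the captured voice have already been reduced to fixed-length lists of numbers (hypothetical "voice feature vectors"), and it uses cosine similarity with an arbitrary threshold of 0.8 as the comparison. The function names are invented for this example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no voice information
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled, captured, threshold=0.8):
    """Accept the caller if the captured vector is close to the enrolled one.

    `enrolled` and `captured` are hypothetical feature vectors; the
    threshold value is an assumption chosen only for illustration.
    """
    if len(enrolled) != len(captured):
        raise ValueError("feature vectors must have the same length")
    return cosine_similarity(enrolled, captured) >= threshold


# Toy vectors: the enrolled pattern, the same customer on a later call,
# and a different speaker.
enrolled_pattern = [0.9, 0.1, 0.4]
same_customer = [0.85, 0.15, 0.38]
other_speaker = [0.1, 0.9, 0.2]

print(verify_speaker(enrolled_pattern, same_customer))
print(verify_speaker(enrolled_pattern, other_speaker))
```

Note that a check of this kind only measures how similar two voices sound. By itself it cannot tell whether the audio comes from a live person, a recording or a synthetic clone.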

According to several banks’ websites, voice biometrics is very secure and, like a fingerprint, each voice is unique. But threat actors can use voice biometric spoofing attacks, also known as voice cloning or deep fake attacks, to break into people’s bank accounts. These are presentation attacks that use recorded voice, computer-altered voice or synthetic (cloned) voice to fool a voice biometric system into thinking it hears the real, authorized user, so that it grants access to sensitive information and accounts. In simple words, attackers clone the voice of bank customers by artificially simulating it.

According to Atul Narula, a cyber security expert, today’s AI systems are capable of generating synthetic speech that closely resembles a targeted human voice. In some cases, the difference between the real and the fake voice is imperceptible. Threat actors target not only public figures such as celebrities, politicians and business leaders; in reality they can target anyone who has a bank account. They can use online videos, speeches, conference calls, phone conversations and social media posts to gather the data needed to train a system to clone a voice.

Cyber criminals are using a new breed of phishing scams that exploit a victim’s belief that they are talking to someone they trust. Last year, a UK-based CEO was tricked into transferring more than $240,000 on the basis of a phone call that he believed was from his boss. Armed with voice clones, these cyber criminals work through phone calls and voicemail. And the attacks don’t threaten only businesses: in a new version of the “grandma scam,” cyber criminals pose as family members who need emergency funds.

Cyber criminals have also started using deep fake voices to spread misinformation and fake news. Imagine somebody publishing a fake voice call of a public figure to sway public opinion, or consider how manipulated statements by executives or public figures could affect the stock market. Recently, some people appear to have used deepfake technology to imitate members of the Russian political class, mainly from the opposition to Vladimir Putin’s government, in order to make fake video calls to representatives of European parliaments.

Deepfakes are also being used to create fake evidence that affects criminal cases, and to blackmail people with manipulated video and audio that shows them doing or saying things they never did or said.

HOW IS DEEP FAKE VOICE CLONING DONE?

Today, artificial intelligence and deep learning are advancing the quality of synthetic speech. With as little as a few minutes of recorded sample voice, developers can train an AI voice model that can read any text in the target’s voice.

According to Atul Narula, a cyber security expert from the International Institute of Cyber Security, a variety of AI tools enable virtually any voice to be cloned. Some of these are:

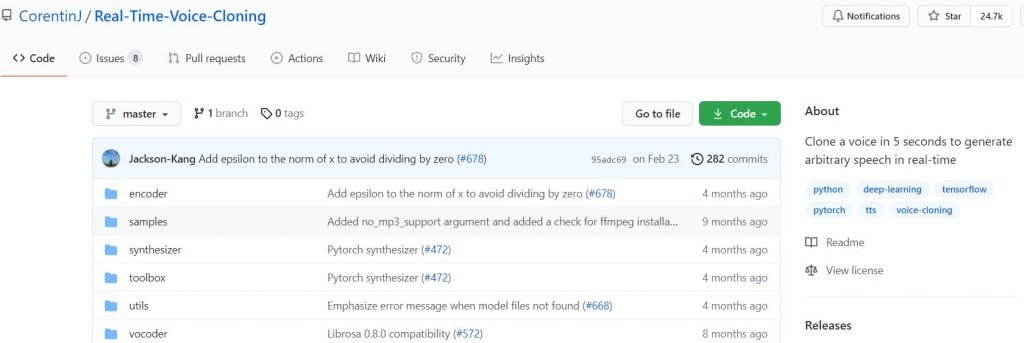

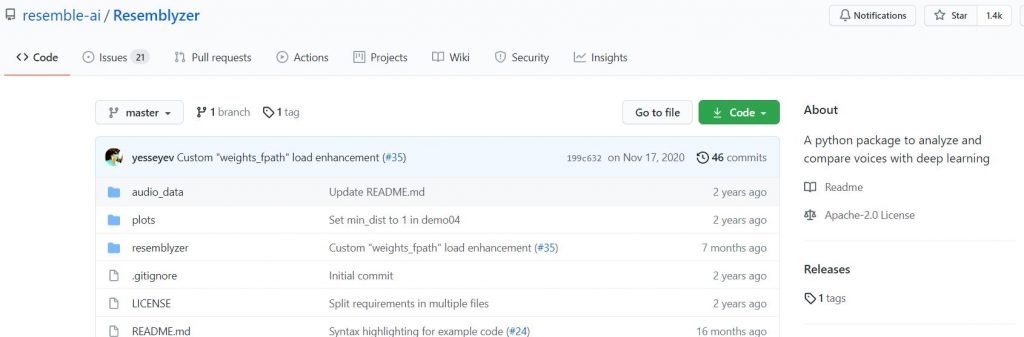

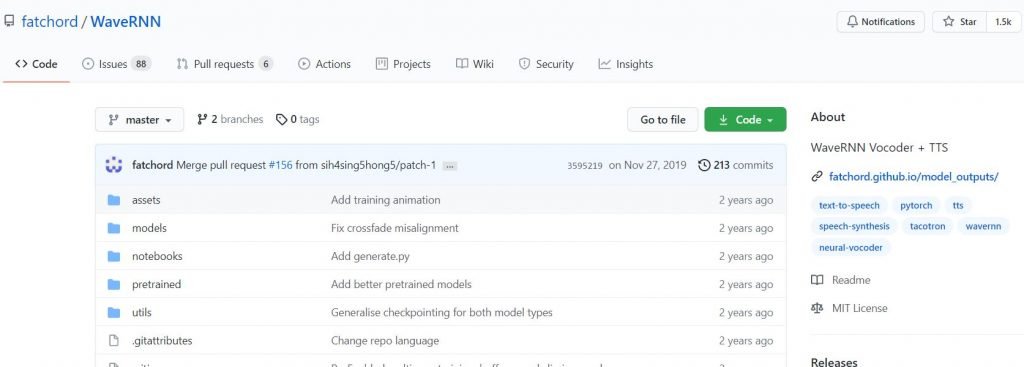

SV2TTS Real Time Voice Cloning, Resemblyzer and WaveRNN

There are some good free tools, such as Real Time Voice Cloning, Resemblyzer and WaveRNN, which allow voice cloning with pre-trained models. While these can be used to generate speech from arbitrary text in one of a few hundred voices, they can also be fine-tuned to generate speech in an arbitrary voice from arbitrary text.

Resemble.AI

It allows custom AI-generated voices to be created from a speech source. It creates realistic text-to-speech voices from just 5 minutes of sample voice. You can try it for free.

iSpeech

It is a high-quality text-to-speech and speech recognition tool. You can generate anybody’s voice in 27 languages.

Descript – Overdub – Lyrebird AI

It allows you to create a digital voice that sounds like you from just a small audio sample. It has a free plan that allows generating 3 hours of speech.

Vera Voice

It uses machine-learning technology to create super realistic voice clones of any person. The company claims that it needs just an hour of audio data to train neural networks to generate a new voice.

Google’s Tacotron – Wavenet

These systems from Google can generate more natural-sounding speech that mimics any human voice. They need text and sample voice data to generate a human-like voice.

Although voice samples can be difficult to obtain, cyber criminals use social media to collect them.

It’s important to note that these tools were not created for the purpose of fraud or deception, Atul Narula points out. But the reality is that businesses and consumers need to be aware of the new threats associated with online AI voice cloning software.

Banks are forcing customers to activate voice biometrics. They use different phrases, such as “my voice is my password” or “my voice is my signature”. To verify their identity, users have to enter their account number, customer ID or 16-digit card number and then say their voice authentication phrase. An account number is more or less public: it is printed on the cheque book, and a threat actor can obtain it through social engineering, for example by asking for it in order to deposit some money, and people will happily give it.

There are three scenarios that someone could use to hack into the voice authentication systems used by many banks.

- In the first scenario, someone calls you to sell something and gets you to say certain words during the call, such as “Yes”, “My Voice”, “Signature”, “Password”, “Username”, “No” and the name of your bank. The caller later assembles the phrase from the recorded words and plays the recording during a telephone banking call.

- In the second scenario, someone calls you, asks you to repeat the entire phrase “my voice is my signature” and later plays the recording during a telephone banking call.

- In the third scenario, someone calls you, records a sample of your voice and uses the deep fake artificial intelligence tools mentioned above to generate the complete phrase or the missing words. These tools are not perfect yet, but they can generate a voice similar to yours, and a sample of just a few minutes is enough to generate the phrase.

Using these three scenarios, a cyber security expert from the International Institute of Cyber Security recorded a call and later, with the help of audio editing software, created the entire phrase. He then played the recorded audio during a telephone banking call. Using this technique he was easily able to break into banks’ telephone banking sessions. He used the same technique to generate the phrases in both English and Spanish. It seems voice authentication systems are vulnerable to voice cloning attacks, and threat actors could break into anybody’s account with just the account number or customer ID and some social engineering to carry out any of the scenarios described above. See the video for the proof of concept (POC).

IS IT POSSIBLE TO DETECT VOICE CLONING?

Mariano Octavio, a cyber security investigator, mentions that voice cloning is not an evil technology. It has many positive and exciting use cases, such as:

Education: Cloning the voices of historical figures offers new opportunities for interactive teaching and dynamic storytelling in museums.

Audiobooks: Celebrity voices can be used to narrate books and historical figures can tell their stories in their own voices.

Assistive Technology: Voice cloning can be used to assist persons with disabilities or health issues that impact their speech.

According to Jitender Narula, a cyber security expert from the International Institute of Cyber Security, voice anti-spoofing, also called voice liveness detection, is a technology capable of distinguishing between live voice and voice that is recorded, manipulated or synthetic.

For advanced voice biometrics, interactive liveness detection is used: the person is asked to say a randomly generated phrase. However, the current capabilities of neural networks make it possible to bypass interactive liveness detection.
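As a rough illustration of the challenge step in interactive liveness detection, the sketch below generates an unpredictable phrase and checks whether the words the caller spoke match it. Everything here is an assumption made for the example: the word list, the function names, and the idea that a separate speech recognizer (not shown) has already turned the caller's audio into a text transcript.

```python
import secrets

# Hypothetical vocabulary; a real system would use a much larger list.
WORDS = [
    "river", "orange", "window", "seven", "garden", "yellow",
    "mountain", "paper", "silver", "bridge", "coffee", "planet",
]


def make_challenge(num_words=4):
    """Pick distinct random words so the phrase cannot be pre-recorded."""
    chooser = secrets.SystemRandom()  # cryptographically strong randomness
    return " ".join(chooser.sample(WORDS, num_words))


def transcript_matches(challenge, transcript):
    """Compare the expected phrase with the recognized text, ignoring case
    and extra whitespace. `transcript` is assumed to come from a speech
    recognizer that is outside the scope of this sketch."""
    return challenge.lower().split() == transcript.lower().split()


challenge = make_challenge()
print("Please say:", challenge)
print(transcript_matches(challenge, challenge.upper()))
print(transcript_matches(challenge, "my voice is my password"))
```

A fresh phrase on every call defeats the simple replay of a fixed recording such as "my voice is my password". It does not by itself stop an attacker who can synthesize the requested words in the victim's voice during the call, which is the bypass described above.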

Experts understand the risks associated with biometric systems and are beginning to resort to a multimodal approach, in which several types of biometrics, such as facial recognition and voice recognition, are embedded in the identification system.
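One simple way to picture a multimodal decision is score fusion: each biometric produces a match score, and access is granted only when the combination is strong enough. The sketch below is a minimal example under assumptions that do not come from any specific product: scores are taken to lie between 0 and 1, the weights, threshold and per-modality floor are arbitrary illustrative values, and the function names are hypothetical.

```python
def fuse_scores(voice_score, face_score, voice_weight=0.5):
    """Weighted average of two match scores, each expected in [0, 1]."""
    for name, value in (("voice_score", voice_score),
                        ("face_score", face_score),
                        ("voice_weight", voice_weight)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return voice_weight * voice_score + (1.0 - voice_weight) * face_score


def multimodal_accept(voice_score, face_score, threshold=0.8, floor=0.6):
    """Accept only if the fused score clears the threshold AND neither
    modality falls below the floor, so one very strong score cannot
    compensate for a failed one."""
    if min(voice_score, face_score) < floor:
        return False
    return fuse_scores(voice_score, face_score) >= threshold


# Genuine customer: both modalities match well.
print(multimodal_accept(voice_score=0.95, face_score=0.9))
# Cloned voice: the voice score is high, but the face does not match.
print(multimodal_accept(voice_score=0.99, face_score=0.2))
```

The per-modality floor is the design choice that matters here: with a plain average, a near-perfect cloned voice could pull a weak face match over the line.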

But it seems banks don’t have this technology, since the voice authentication used by many banks can be hacked, as shown in the video.

Atul Narula mentions that there are many risks associated with biometric authentication. Companies and financial institutions need to focus on developing advanced deep fake detection solutions. At the same time, we should focus on raising awareness and educating social media users about the risks associated with deepfake technology.

Information security specialist, currently working as risk infrastructure specialist & investigator.

15 years of experience in risk and control process, security audit support, business continuity design and support, workgroup management and information security standards.